Airflow is always confusing the first time you work with it. Why is my DAG not triggered when the scheduled time arrives? Why is a DAG not triggered at all, with the message “the task start time is later than execution time”? My CRON schedules range from hourly to monthly, so how should I set the start time dynamically? I asked myself all these questions when I was working with Airflow, and this article is a record of my understanding. The code snippets included in the article are tested on Airflow 2.2.5 + Composer.

Airflow scheduler

Apache Airflow is a powerful tool that helps you orchestrate and schedule your data pipelines. The key component here is the Airflow scheduler: it monitors all tasks and DAGs, then triggers the task instances once their dependencies are complete.


For a scheduled DAG to be triggered, one of the following needs to be provided:

- Schedule interval: to set your DAG to run on a simple schedule, you can use: a preset, a cron expression or a

- Timetables. “Timetables” is a new concept introduced in Airflow 2.2. It provides more advanced scheduling possibilities, for example, if you want to “skip” certain runs. However, this is not going to be part of this article 🙂

- Data-aware scheduling with Dataset. This is also a new feature introduced since Airflow

Once we have our schedule ready, we have an expectation of when our Airflow DAG will run. But sometimes the actual runs do not align with that expectation. Why? In this article, we will mainly discuss the case where you set your schedule with a CRON expression (some cases also apply to a preset).

Data interval, logical date, run time

Several concepts are important if you wish to understand when your DAG will be scheduled.

- start_date: the start date defines when your DAG will run for the first time. A DAG run will only be scheduled one interval after start_date.

- data interval: the data interval describes the time range between two DAG runs. For example, if you define the schedule as 30 22 * * * (22:30 daily), then your data interval runs from 22:30 to the same time on the next day. There are two values associated with the concept:

– logical_date / data_interval_start: this used to be called execution date until Airflow 2.2, but as the value indicates the start of the data interval, not the actual execution time, the variable name was updated.

– data_interval_end: the end of the data interval

- DAG run time: this is the actual DAG execution time. According to Airflow’s official documentation:

A DAG run is usually scheduled after its associated data interval has ended, to ensure the run is able to collect all the data within the time period.

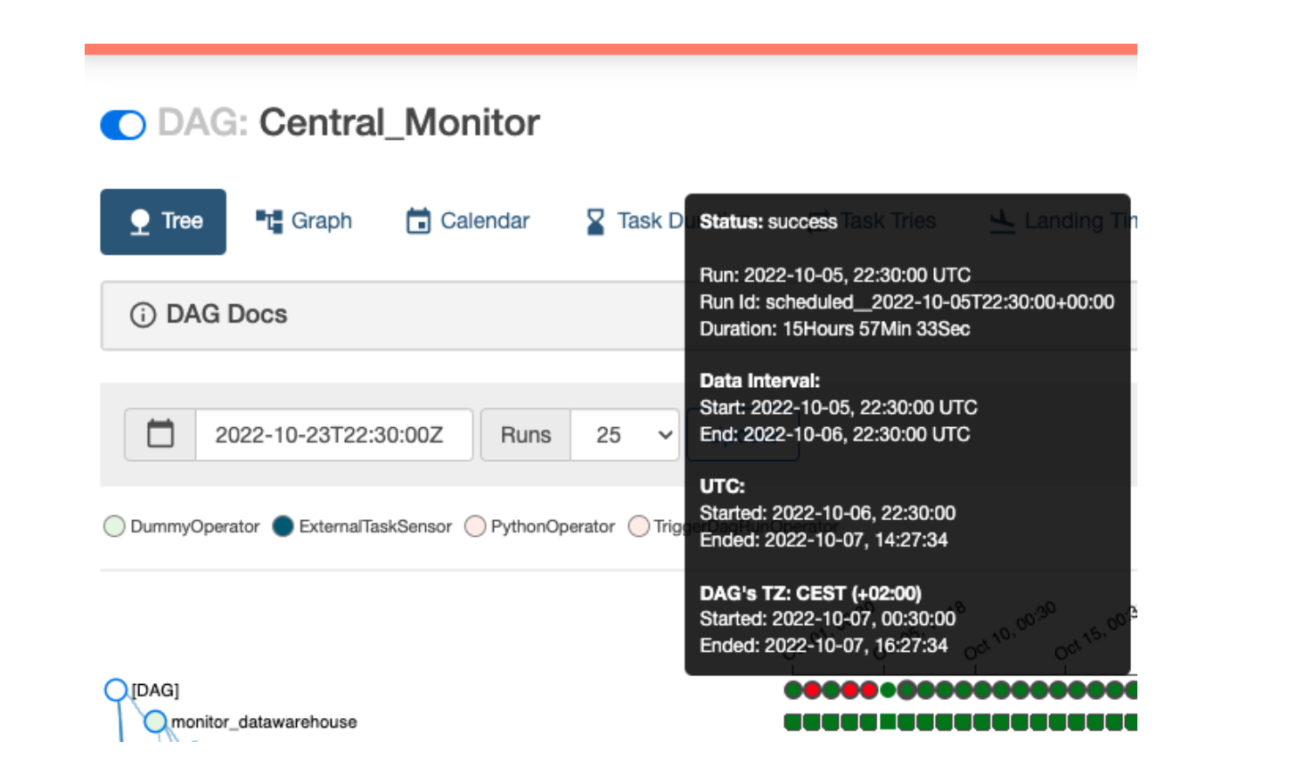

For example, for a DAG with the schedule 30 22 * * *, you can clearly see in the screenshot:

– data_interval_start: 2022-10-05, 22:30

– data_interval_end: 2022-10-06, 22:30

– DAG run started: 2022-10-06, 22:30
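The values in the screenshot can be reproduced with plain datetime arithmetic. The sketch below assumes equally spaced daily runs in UTC. `data_interval_for` is a hypothetical helper written for this illustration, not an Airflow API; it only shows that a run is triggered at the moment its data interval ends.

```python
from datetime import datetime, timedelta, timezone


def data_interval_for(run_time, interval):
    """Return (data_interval_start, data_interval_end) for a run fired at run_time.

    Hypothetical helper: assumes equally spaced runs, so the data interval
    ends exactly when the run is triggered.
    """
    data_interval_end = run_time
    data_interval_start = run_time - interval
    return data_interval_start, data_interval_end


# The run from the screenshot: schedule "30 22 * * *", started 2022-10-06 22:30.
run_started = datetime(2022, 10, 6, 22, 30, tzinfo=timezone.utc)
start, end = data_interval_for(run_started, timedelta(days=1))

print("data_interval_start (logical_date):", start.isoformat())
print("data_interval_end:", end.isoformat())
```

The logical date printed here is a full day earlier than the moment the run actually started, which is the usual source of confusion.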

What about start_date? According to the documentation:

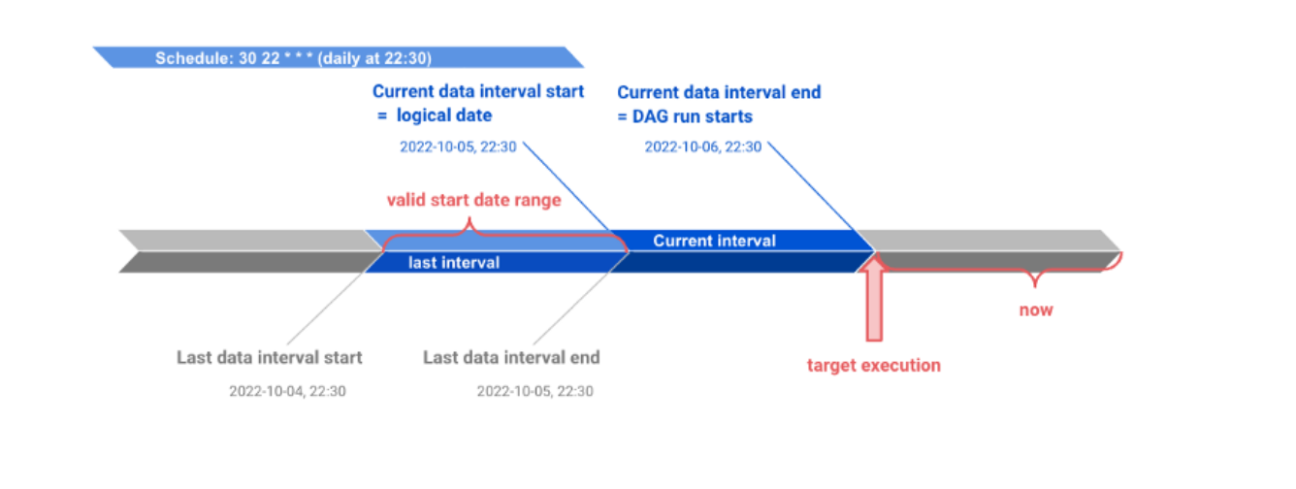

Similarly, since the start_date argument for the DAG and its tasks points to the same logical date, it marks the start of the DAG’s first data interval, not when tasks in the DAG will start running. In other words, a DAG run will only be scheduled one interval after start_date.

Basically, as long as the start date is no later than a run's data_interval_start, that run will be scheduled.
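To make the "one interval after start_date" rule concrete, here is a minimal sketch for a daily 22:30 UTC schedule. `first_run_time` is a hypothetical helper, and it rests on one assumption: the first data interval starts at the first schedule tick at or after start_date.

```python
from datetime import datetime, time, timedelta, timezone


def first_run_time(start_date, tick=time(22, 30), interval=timedelta(days=1)):
    """Return (first data_interval_start, time the first run is triggered)."""
    # Candidate tick on the same calendar day as start_date.
    candidate = datetime.combine(start_date.date(), tick, tzinfo=start_date.tzinfo)
    # Assumption: the first interval starts at the first tick at or after start_date.
    first_interval_start = candidate if candidate >= start_date else candidate + interval
    # The run itself is only triggered once that interval has ended.
    return first_interval_start, first_interval_start + interval


start_date = datetime(2022, 10, 5, 10, 0, tzinfo=timezone.utc)
interval_start, run_at = first_run_time(start_date)
print("first data_interval_start:", interval_start.isoformat())
print("first run triggered at:   ", run_at.isoformat())
```

With a start_date of 5 October 10:00, the first run is not triggered until 6 October 22:30, more than a day later.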

Start date for equally spaced interval

So, how to set a start date?

The simplest is to set a fixed date, e.g. “2020-10-01”. However, if you set the start_date too early and have the backfill flag (catchup) enabled, the DAG will catch up and initiate multiple runs from that datetime. This will also happen if you recreate your Airflow cluster or happen to delete all your run history, which can be annoying.

And if you set it too late, you might see the error “the task start time is later than execution time”, and the DAG is not started.

If I want a start date that makes the DAG run exactly once as soon as the DAG is submitted, regardless of the schedule, what should it be?

This is easy to understand once we understand the data interval logic. Basically, you need to set the start_date before the start of the current data interval (the one that has most recently ended) and after the start of the one before it. The timeline is shown in the figure below:

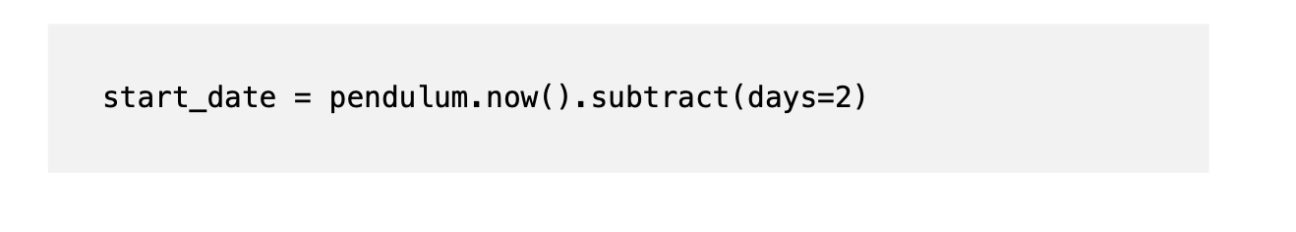

Thus, for a daily scheduled DAG, it is safe to set the start_date 2 days (= 2 intervals) before the current time. Similarly, for an hourly scheduled DAG, the start_date should be 2 hours (= 2 intervals) before the current time.
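For the daily schedule, the two-interval rule can be checked with a small simulation. This is only a sketch: `due_runs` is a hypothetical helper, the schedule is hard-coded to 30 22 * * * in UTC, catch-up is assumed to be enabled, and the first interval is assumed to start at the first tick at or after start_date.

```python
from datetime import datetime, time, timedelta, timezone

INTERVAL = timedelta(days=1)
TICK = time(22, 30)  # schedule "30 22 * * *"


def due_runs(start_date, now):
    """List the data_interval_start of every run that is already due at `now`."""
    tick = datetime.combine(start_date.date(), TICK, tzinfo=start_date.tzinfo)
    if tick < start_date:
        tick += INTERVAL  # move to the first tick at or after start_date
    due = []
    while tick + INTERVAL <= now:  # a run is due once its data interval has ended
        due.append(tick)
        tick += INTERVAL
    return due


now = datetime(2022, 10, 7, 10, 0, tzinfo=timezone.utc)
for days_back in (1, 2, 3):
    start_date = now - timedelta(days=days_back)
    print(days_back, "day(s) back:", len(due_runs(start_date, now)), "run(s) due")
```

One day back leaves nothing to run yet, two days back leaves exactly one run, and three days back already triggers a catch-up run on top of it.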

Timezone

By default, Airflow uses the UTC timezone. This applies whether you schedule with a preset like @daily or a CRON expression like 30 0 * * * (at 0:30 daily). That is to say, so far the DAG is not timezone aware.

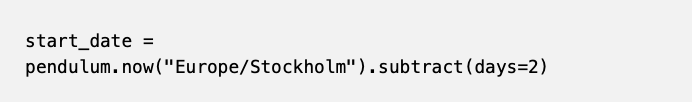

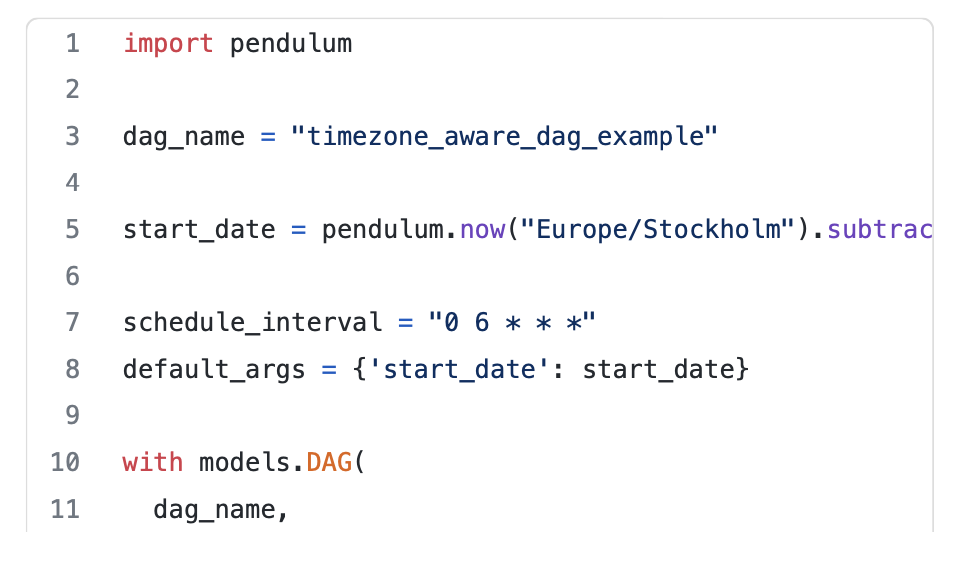

How to set it? The default time zone is set in airflow.cfg. But a quick hack, if you want to apply a timezone to individual DAGs, is to apply the timezone to start_date.
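To illustrate why this matters, the sketch below uses only the standard library to show which UTC instant a local 00:30 run corresponds to. The fixed UTC+2 offset and the date are assumptions chosen for the example and stand in for a real time zone. In the DAG itself, the time zone would be attached to start_date with Pendulum, for instance `pendulum.datetime(2022, 10, 1, tz="Europe/Amsterdam")`.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a fixed UTC+2 offset standing in for a real local time zone.
local_zone = timezone(timedelta(hours=2), "UTC+2")

# "30 0 * * *" evaluated in local time: 00:30 on 2 October 2022.
local_tick = datetime(2022, 10, 2, 0, 30, tzinfo=local_zone)
# The same instant expressed in UTC, which is what a timezone-unaware DAG uses.
utc_tick = local_tick.astimezone(timezone.utc)

print("local:", local_tick.isoformat())
print("utc:  ", utc_tick.isoformat())
```

The local 00:30 run falls on the previous calendar day in UTC, so a schedule interpreted in UTC would fire two hours later than intended in local time.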

Works like a charm. Pendulum is pre-installed with Airflow so you can simply import the library. The DAG looks like:

Start date for unequally spaced interval

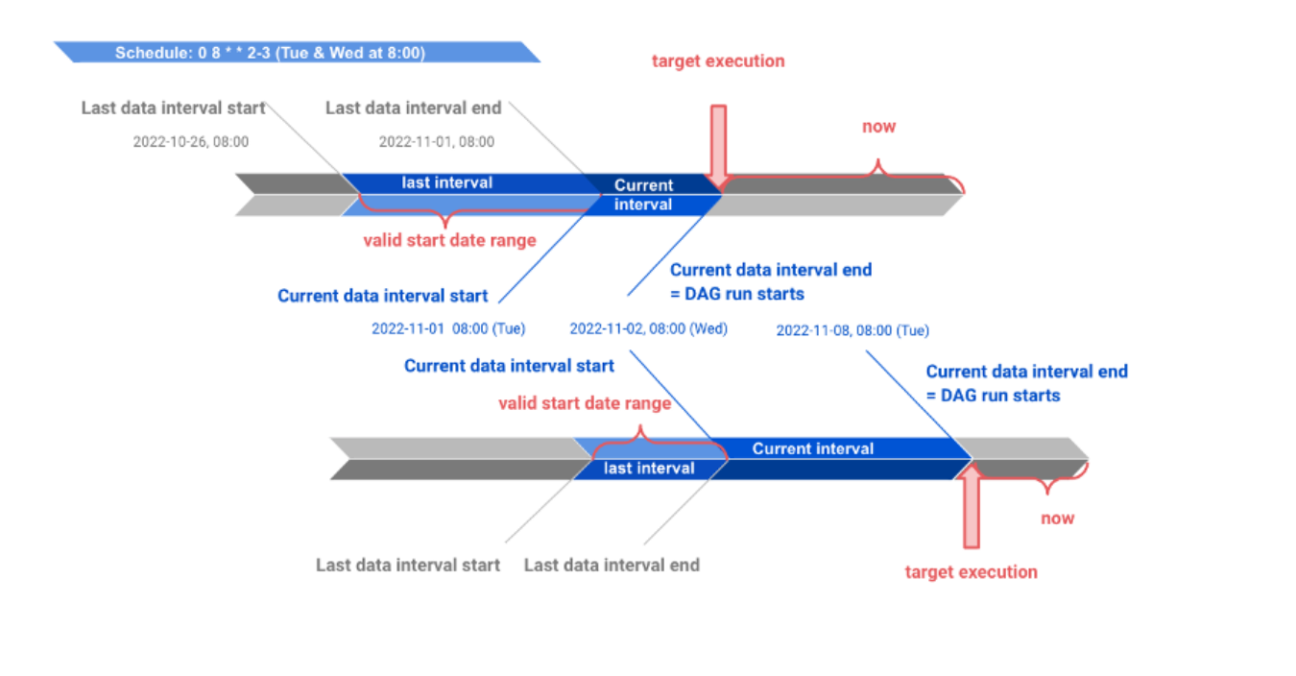

Now we want to move on to setting up a start date dynamically for an unequally spaced interval, for example: 0 8 * * 2-3. This schedules a DAG to run at 8am every Tuesday and Wednesday.

Exactly the same question as before: if I want a start date that makes the DAG run exactly once as soon as the DAG is submitted, regardless of the schedule, what should it be?

The trick is the same as with an equally spaced interval: find the data interval start. The figure below shows the valid start_date ranges for two different target executions:

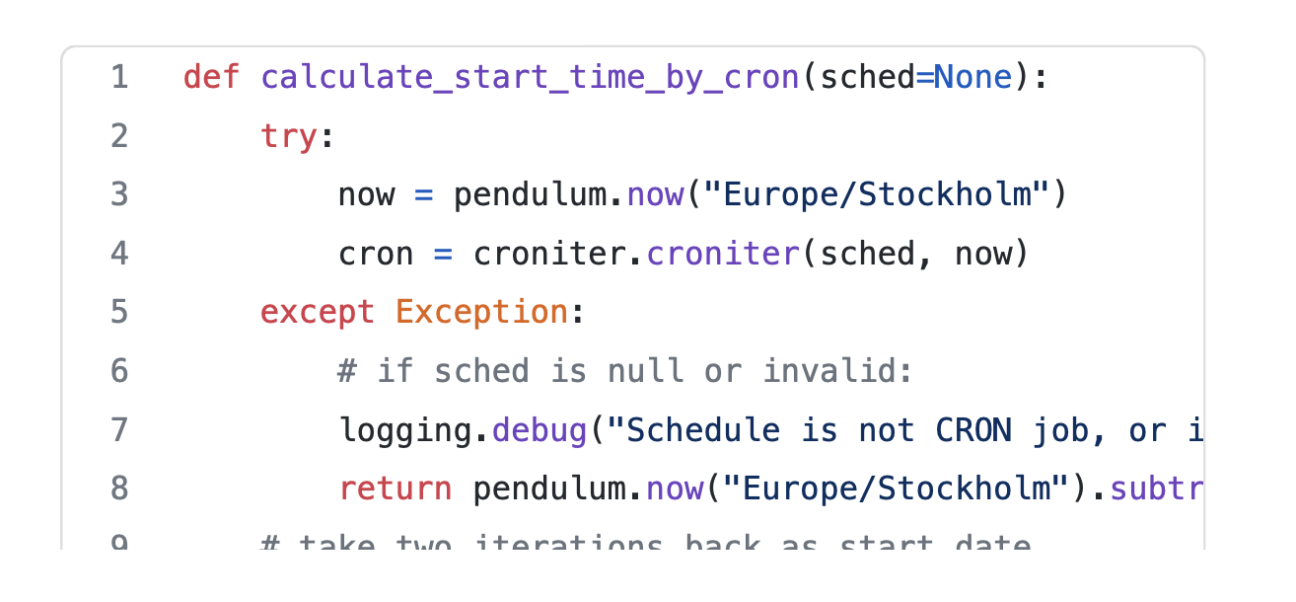
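The same ranges can be worked out in code. This is a sketch with the schedule 0 8 * * 2-3 hard-coded in UTC; `previous_tick` and `valid_start_date_range` are hypothetical helpers written for this example, and the target dates are assumptions.

```python
from datetime import datetime, timedelta, timezone

RUN_WEEKDAYS = {1, 2}  # Python weekday(): Tuesday=1, Wednesday=2 (cron "2-3")
RUN_HOUR = 8           # schedule "0 8 * * 2-3"


def previous_tick(moment):
    """Latest schedule tick strictly before `moment`."""
    tick = moment.replace(hour=RUN_HOUR, minute=0, second=0, microsecond=0)
    if tick >= moment:
        tick -= timedelta(days=1)
    while tick.weekday() not in RUN_WEEKDAYS:
        tick -= timedelta(days=1)
    return tick


def valid_start_date_range(target_run):
    """start_date must be after the first value and no later than the second."""
    range_end = previous_tick(target_run)   # data_interval_start of the target run
    range_start = previous_tick(range_end)  # the tick before that
    return range_start, range_end


tuesday_run = datetime(2022, 10, 4, 8, 0, tzinfo=timezone.utc)
wednesday_run = datetime(2022, 10, 5, 8, 0, tzinfo=timezone.utc)
for target in (tuesday_run, wednesday_run):
    after, up_to = valid_start_date_range(target)
    print(f"run at {target:%Y-%m-%d %H:%M}: start_date after {after:%Y-%m-%d %H:%M}, up to {up_to:%Y-%m-%d %H:%M}")
```

The Tuesday run only has a one-day window for start_date, while the Wednesday run has a window of almost a week, because the intervals before them differ in length.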

An (almost) universal solution to set up start date with CRON job

One of the benefits of a dynamic start_date that makes the DAG run exactly once as soon as the DAG is submitted is this: when you need to upgrade or recreate your Airflow cluster, you don’t need to worry about whether a scheduled DAG has backfill configured, the problem we discussed earlier with a fixed start_date.

And the trick is straightforward: find the data interval start.
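A minimal sketch of that idea, using only the standard library: step back two schedule ticks from the current time and use the result as start_date. `dynamic_start_date` and `previous_daily_2230` are hypothetical names. For an arbitrary CRON expression, the `previous_tick` callable could be backed by croniter, e.g. `croniter(expression, t).get_prev(datetime)`, which I assume is available wherever Airflow is installed.

```python
from datetime import datetime, timedelta, timezone


def dynamic_start_date(now, previous_tick):
    """Return a start_date that leaves exactly one run due at `now`.

    `previous_tick(t)` must return the latest schedule tick strictly before t.
    """
    latest_run = previous_tick(now)             # when the newest finished interval ended
    interval_start = previous_tick(latest_run)  # data_interval_start of that interval
    return interval_start


def previous_daily_2230(moment):
    """previous_tick for the schedule "30 22 * * *"."""
    tick = moment.replace(hour=22, minute=30, second=0, microsecond=0)
    return tick if tick < moment else tick - timedelta(days=1)


now = datetime(2022, 10, 7, 10, 0, tzinfo=timezone.utc)
start_date = dynamic_start_date(now, previous_daily_2230)
print("start_date:", start_date.isoformat())
```

Because the helper only needs a function that returns the previous tick, the same two steps work for equally and unequally spaced schedules alike.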

So, why almost? This does not account for Daylight Saving Time (DST), when one hour appears or disappears out of nowhere during the day. There are workarounds for this problem, but my suggestion is to keep it simple and avoid it: don’t set your schedule around the clock times at which the DST change happens.