AI Applied

SMART is the word embodying CES 2018. By smart we mean applied AI, which in this post broadly covers machine learning, neural networks and deep learning. Today, in its more or less advanced forms, applied AI is giving a growing swarm of gadgets new and lifelike capabilities. The clichéd use of “smart” from previous CES years changed as we toured the exhibitions of CES 2018. It grew realistic as we saw companies presenting real-life applications in tracks and sessions. It became awesome as self-driving cars and buses roamed the streets of Las Vegas. And it became awe-inspiring, but also concerning, as we saw robots adapting to the mood and behavior of the people they interacted with. Four days in Vegas, four tech scouts from Jayway. Here is a recap of four top themes: smart vehicles, smart robots, smart cities and smart gadgets. In this first part of the blog post we start with smart vehicles and robots.

Smart vehicles

In stark contrast to BMW burning rubber with test drivers in M3s outside the Las Vegas Convention Center, the shiny pavilions inside the center demonstrated clean and polished experiences of the future of driving and transit. Among the most fascinating concepts was Toyota’s autonomous vehicle concept e-Palette, launched in collaboration with Uber and Amazon. Sarcastic tweeters in hordes were quick to write “you have invented a bus”-type messages. However, after the announcements, it was easy to see the truly innovative value of Toyota’s concept. It is a class of vehicles that can fit a range of purposes, from microtransit to goods transportation. They can even be joined together to form a fleet. Imagine a mobile medical squad for disaster deployment, or Burning Man-type venues for entertainment festivals.

Hyundai’s futuristic concept car on display also triggered fascination. It offered a prototype of a future vision of driving. This entailed automatic driver identification with Face ID-type features, shown with a fancy facial recognition animation, so that the car can adapt to its driver. It had a pop-up steering display and controls that activated when the driver shifted between autonomous and manual or semi-manual mode. And naturally there was voice control to ease UI input, along with hub controls and displays with large curved visual interfaces.

Outside the LVCC, the futuristic glimpses we had seen inside quickly became reality. We saw the eight self-driving cars from Aptiv and Lyft picking up passengers and driving them to 20 CES-related destinations. We did not take the time for a ride, but a Danish journalist who prioritized the three-hour wait gave the following verdict: quite dull and uneventful, just as it should be.

In terms of beauty and autonomous vehicles, we were all smiles when we found the beautiful Fisker vehicle from Danish designer Henrik Fisker. His iconic lines can be found in the designs of the BMW Z8 and Aston Martin DB9. The Fisker EMotion clearly took the prize for aesthetics among the e-cars there. It will be interesting to see how it holds up in tests against Tesla and against what we expect will come from the Chinese company Byton. It will still be some time until we meet this beauty on the roads, as the fancy falcon doors are still not working, according to The Verge.

No doubt, electricity has solidified its position as an energy source for cars. Also emerging is a growing number of players exhibiting battery-driven scooters and motorcycles. We did not see any self-driving ones yet, but can already start imagining what will be brought forward next year as autonomous driving platforms and hardware such as NVIDIA DRIVE mature further. What we did see was an exceptionally well executed electric scooter concept by the Luxembourg-based company Ujet. They have developed a scooter which easily folds into a roller case the size of a carry-on. What impressed us with Ujet was their “Apple’ish” execution. Clean, purposeful and hidden complexity were the key words. The software of the scooter screens was very nicely designed and fit for purpose. The same design and quality line followed through to the companion apps for the scooter, the VR tour of the factory and an AR e-commerce experience. We loved it: a definite highlight!

The innovation wave in self-driving cars is happening right now. It is moving fast, faster than many previous technologies in the car industry, and it became clear that it is commoditizing extremely rapidly. The reason? Platforms combining hardware and software for self-driving cars are becoming turnkey solutions. NVIDIA, best known as a graphics card company, has ridden AI’s need for parallel computing, which is exceptionally well served by parallel-processing GPUs, to new heights. They have re-emerged as a fantastically strong contender in the AI space for autonomous cars, robotics and smart cities.

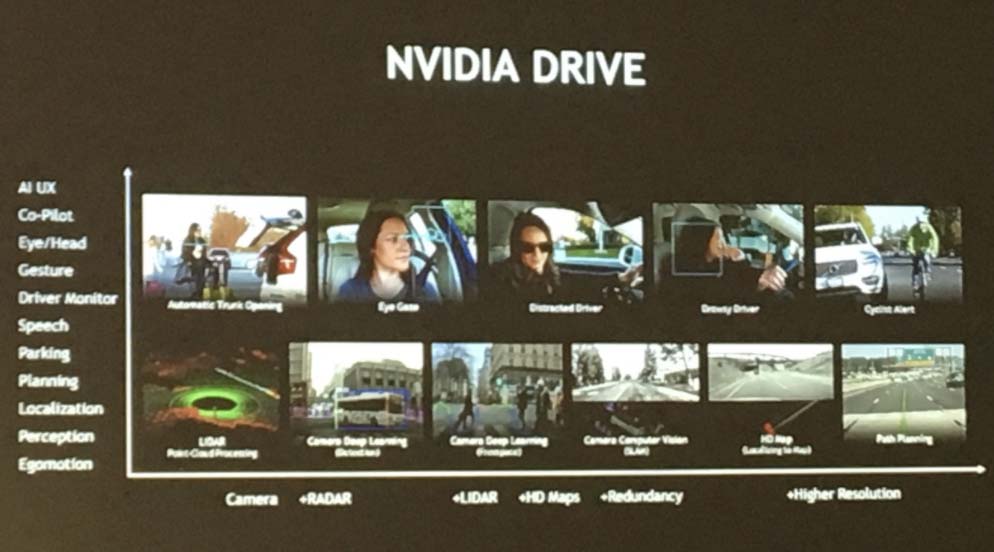

Already, NVIDIA is serving their DRIVE platform technology to more than 20 car makers. This platform includes an AI supercomputer the size of a palm, which can do both edge and cloud-based computing, tackling streams from up to 12 cameras while simultaneously processing inputs from LIDARs, radars and ultrasonic sensors. It also includes a software suite combining all three key areas of self-driving cars: DRIVE AV (autonomous vehicle software), DRIVE IX (intelligent experience software) and DRIVE AR (augmented reality software). Combined, this enables powerful development on top of the hardware, including an SDK-type experience for creating an AI co-pilot. The NVIDIA platform’s next frontier is achieving level 5 autonomous driving, with HD resolution processing and ever higher car-in-context resolution and awareness (as seen in this slide picture from the NVIDIA presentation).

It is impressive how technology for autonomous vehicles has progressed over the last years. We have moved on from R&D set-ups where test cars with sensors and cameras produce a total of 2.5–5 GB of data for processing every second. Yes, that is filling the SD card of a typical SLR every four to five seconds! Today, the algorithms built on the enormous amounts of data offloaded from these test vehicles have become sufficiently tuned to allow edge processing on the hardware onboard the vehicles themselves. The ever higher fidelity and continued tuning of smart vehicle technology, and the gradual phasing out of manual cars, will fully change how we think about driving and urban space. Just in terms of efficiency gains we can expect 30–40% better lane utilisation, essentially adding a lane to our current roads. After all, computers are not claustrophobic. For a sober view of the current state, we encourage watching this video to see the highlights of NVIDIA’s CES launch event.
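For readers who want to check the numbers above, here is a small back-of-the-envelope sketch. The data rates and the utilisation gain come from the paragraph above; the 16 GB card size and the three-lane road are our own assumptions, picked purely for illustration.

```python
# Back-of-the-envelope check of the figures quoted above.
# ASSUMPTIONS (not from the presentation): a 16 GB memory card, a three-lane road.


def seconds_to_fill(card_gb: float, rate_gb_per_s: float) -> float:
    """Seconds needed to fill a card of card_gb at a steady data rate."""
    return card_gb / rate_gb_per_s


def terabytes_per_hour(rate_gb_per_s: float) -> float:
    """Data volume of one hour of driving, in TB (1 TB = 1000 GB)."""
    return rate_gb_per_s * 3600 / 1000


def effective_lanes(lanes: int, utilisation_gain: float) -> float:
    """Lane capacity after a fractional gain in lane utilisation."""
    return lanes * (1 + utilisation_gain)


if __name__ == "__main__":
    for rate in (2.5, 5.0):
        print(
            f"{rate} GB/s fills 16 GB in {seconds_to_fill(16, rate):.1f} s, "
            f"{terabytes_per_hour(rate):.0f} TB per hour"
        )
    for gain in (0.30, 0.40):
        print(f"3 lanes at +{gain:.0%} behave like {effective_lanes(3, gain):.1f} lanes")
```

With these assumed inputs the card fills in roughly three to six seconds, and a three-lane road behaves like roughly four lanes, which is in line with the claims above.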

We were also impressed by Mercedes’ launch of their new MBUX, the user experience for the onboard infotainment system. This is also powered by NVIDIA DRIVE technology. It was easy to understand why this system, too, benefits from the gains in GPU-based computing power, and how edge and cloud computing are combined to enable the future of driver experiences in multi-interface environments where interactions also include voice. The presentation of the MBUX system can be found here.

Looking back at what we saw in Las Vegas on self-driving vehicles, we had the feeling that progress is a good bit further ahead in the US than on our home turf, at least in terms of on-road testing. We also see how China is throttling up, witnessed especially by the launch of the Byton SUV. We know Volvo is racing at full pace with NVIDIA and Android. However, from a Scandinavian perspective, given our traditionally well built-out public transit systems, it would be fun to see a wider effort on multi-modal smart city transit where it is not only individual car makers and the “Lyfts and Ubers” driving the initiative. A cross-stakeholder approach engaging in more brand-independent smart city initiatives would be welcome. Nevertheless, Jayway took good note of the developments ahead, and of what opportunities on the software side the new launches and platforms in the autonomous vehicle space will mean going forward.

Smart Robotics

As the grip on autonomous vehicles is getting firmer, it is simultaneously paving the way for the next frontier: autonomous and AI-powered robots. Yes, we did hint at something fascinatingly creepy earlier, and this arose mainly from what we saw in terms of AI robotics. We did indeed miss out on the pole-dancing robots reported by Mashable, but found other fascinating robot applications, including the Android-powered Segway Loomo, combining a hoverboard with a “robot friend”, and the walking robot Walker from Ubtech.

We also saw the LG Cloi robot, the unplanned centerpiece of LG’s unveilings during CES connecting robotics and the smart home. Epically failing to respond to the commands of LG’s US marketing chief, in the worst Murphy’s Law fashion, it certainly exemplified that software controlling robots is tremendously difficult to get right. At CES some had it more right than others. Poor Cloi, though… Hopefully the LG robots clean better than they respond to voice, and this cutie can rise at CES 2019 from its probably unfair placement in the ashes of this year’s show.

Both fascination and raised eyebrows met the singing robot chorus and dance group of iPal robots from AvatarMind. Already available to developers, the robots were suggested for use in education and in entertaining the elderly. Well, thumbs up and true fascination for the technical challenges behind the real-life robots. But for the applications suggested, we couldn’t help having a flashback to the emphatic enthusiasm of the wooden doll chorus welcoming Shrek to charming Duloc, or to a concerted swarm of robots with a will of their own, keenly reminding us of the ethical challenges ahead with AI robotics.

At the more fascinating end of the spectrum we saw a range of surveillance robots. Robots will interact in environments of far more variation than autonomous cars, and therefore introduce a far greater order of complexity. In the highly interesting “Designing AI Powered Robots” panel, Andrew Stein illustrated how the thinking behind the development of software and hardware for Anki Cozmo implies parallel processing and execution of vision and sensing, animatronics, AI and interactive content. Significant work was put into using AI to create traits and behaviors that make Cozmo appear and feel empathetic (e.g. eye movement), and that let it respond to the environment. Playing games, lifting objects and interpreting your behavior are just some of the tricks and treats of this €150 AI robotainment gadget.

In the same panel we were introduced to Kindred, which produces semi-autonomous AI robots. That is, AI-powered robots in a kind of ABB style with a human “failover”. George Babu of Kindred showed a fascinating video of an implementation of the Kindred robot, made in collaboration with Gap, where the robot was used to sort items. Computer vision identified the objects, and the robot would sort them into different channels based on that identification. If an object was not identified, the monitoring human would quickly assist the robot, providing real-time algorithm manipulation and training, making the robot perform better the next time it encountered the same or a similar object. Here, too, it was said that 3 trillion dollars’ worth of automation was awaiting the future of AI robots.
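To make the human “failover” idea concrete, here is a minimal sketch of such a sorting loop. It is our own illustration, not the company’s actual software: the `SortingStation` class, the string “signatures” standing in for computer vision output, and the lookup table standing in for a trained model are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class SortingStation:
    """Toy human-in-the-loop sorter (hypothetical, for illustration only)."""

    # Stand-in for a vision model: maps an item's visual signature to a channel.
    known_items: Dict[str, str] = field(default_factory=dict)
    # Corrections collected from the human operator, usable as training data.
    corrections: List[Tuple[str, str]] = field(default_factory=list)

    def identify(self, signature: str) -> Optional[str]:
        """Return the channel for a recognised item, or None if unknown."""
        return self.known_items.get(signature)

    def sort(self, signature: str, ask_human: Callable[[str], str]) -> Tuple[str, bool]:
        """Sort one item; returns (channel, whether the human had to help)."""
        channel = self.identify(signature)
        if channel is not None:
            return channel, False
        # Failover: the human decides, and the answer is remembered.
        channel = ask_human(signature)
        self.corrections.append((signature, channel))
        self.known_items[signature] = channel
        return channel, True


station = SortingStation(known_items={"blue-jeans": "denim"})
operator = lambda signature: "knitwear"  # hypothetical human decision

print(station.sort("blue-jeans", operator))   # ('denim', False)
print(station.sort("red-sweater", operator))  # ('knitwear', True)
print(station.sort("red-sweater", operator))  # ('knitwear', False)
```

The point of the design is the last line: the second time the same item shows up, the human is no longer needed. A real system would retrain a vision model on the collected corrections rather than memorise exact matches.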

NVIDIA impressed in the robotics space too. Here the Jetson TX2 supercomputer, Jetson SDK and Isaac simulation tool constitute a full AI robotics development environment for building, training and testing robots in virtual simulations before deploying to the edge. Little convincing is needed on the rationale and absolute necessity of testing AI robotics in simulated environments. In addition, the compelling case here was how fast the training of machine learning algorithms could take place when you simply multiply the simulated training environments and feed the training data into a range of algorithms, as demonstrated in this video.

Then on to the cuter things of AI, where we met Aibo, Sony’s little pet-dog robot. We were not the only ones finding it the cutest AI gadget at the show. Aibo has been around for some time, but is now equipped with AI and machine learning, so it will even recognize and form a closer bond with the family members who take most care of it.

After seeing Aibo live, it is no longer far-fetched in our minds that people will start bonding with robots. Yet it still feels like quite a step to holding a funeral for a robot dog, which this CES video log on Aibo from The Verge suggests has taken place in Japan. If you are ready for a clean dog that craves attention, and will devotedly test bonding with an electronic pet, Aibo unfortunately comes neither easy to buyers in Europe and the US, nor cheap. Currently Aibo is sold only in Japan. As it sets you back just short of €1,500, approval may be needed before we go to the Sony kennel and pick one up to become Jayway’s new agency dog. And if we do, can we trust it not to have code which turns it into an aggressive “Aibo”llterrier?

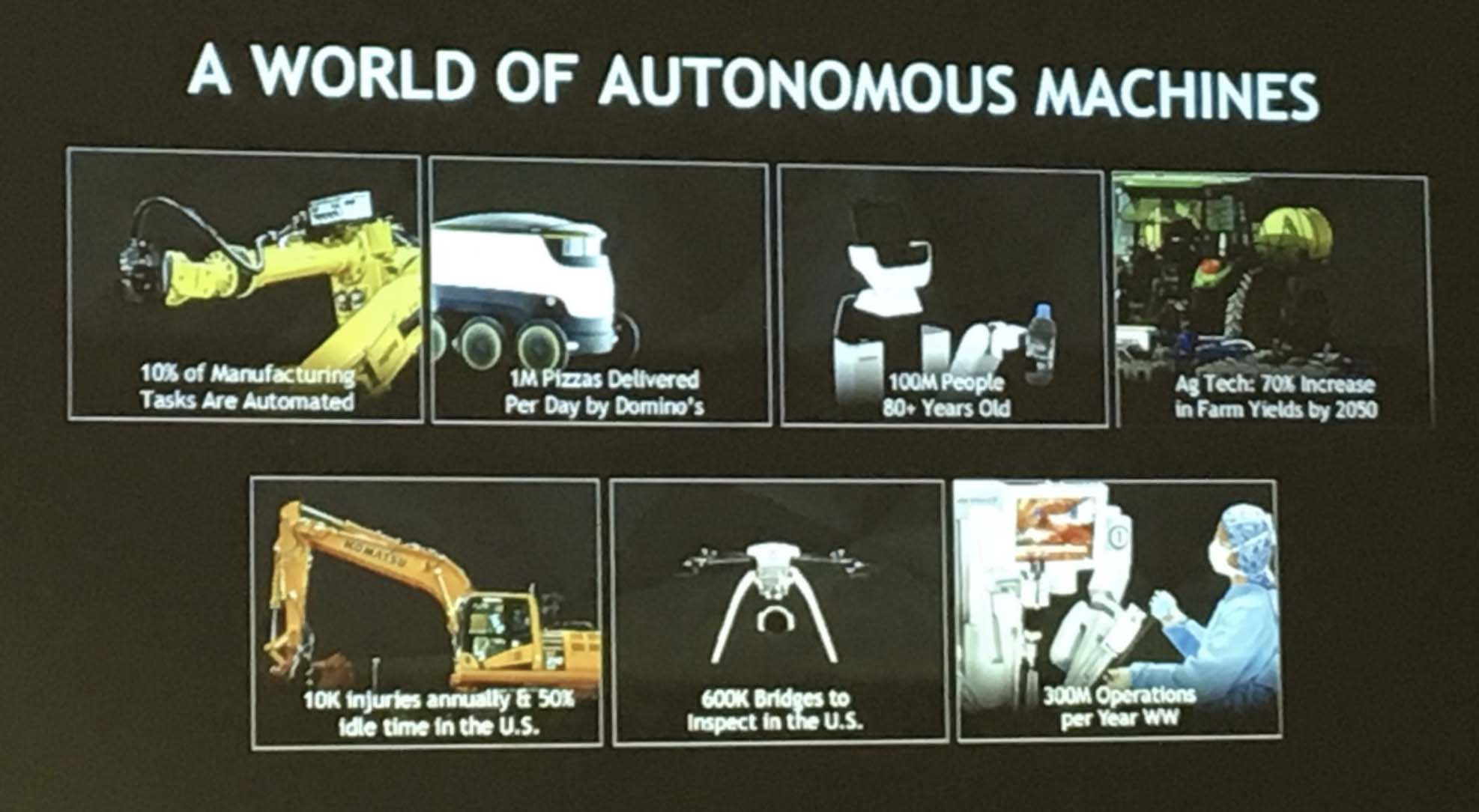

In the panel debates we listened in on, little focus was given to the security and control elements so important to AI robotics. In the “Designing AI Powered Robots” panel it was agreed that significant challenges in progressing AI robotics lie in the need for data minimization and standardisation to run efficient edge operations. Also high on the wishlist were cheaper hardware components, especially optics and sensors, and improvements to manipulation and training. Privacy and data control were mentioned, and questions were asked. However, answers lacked substance, and not much discussion was devoted to how we ensure good AI over aggressive and malevolent AI. Instead the world of autonomous machines was largely painted as a glorified picture across all its application areas, and as part of a blooming $3 trillion AI market opening up at our feet.

Now, reflections on CES have sunk in. There is no escaping that while we are living in the most interesting technological times, we also face baffling ethical dilemmas of a new magnitude as the separation between machine and human continues to fade. In the world of smart robots, the technology providers cannot have an easy backdoor. “Just being an AI robotics enabler” is in a whole other ballpark compared to “just being a gun producer” and needs to be treated accordingly. We as software developers must take responsibility.

When software stacks are embedded in machines, enabling the machines to learn, the CES 2018 impressions form a sharp reminder. We have an inherent and inescapable responsibility not only to write, test and release AI code that progresses humanity, but also to make code that protects against malevolent AI. Vegas is not usually the high seat of morals and ethics. But maybe the rains causing Tuesday’s momentary blackout at CES 2018 were a sign that software makers need to pause, reflect and, more importantly, enact the recommendations of watchdogs, like those found in the AI Now report? At least after seeing the fast progress towards AI robotics packaged as complete development systems like NVIDIA Jetson, it is clear to us that Asimov’s Laws of Robotics, from his science fiction writings of the 1940s, are being challenged at an increasing pace. If we are not careful, this may reach a degree where what was at first human-generated code becomes self-generating and possibly outside of human control. The humiliation could be quite a bit larger than just losing a game of Scrabble.

In Part 2 of this blog post we will cover Smart Cities and Smart Gadgets/Home, and also share some of our learnings on getting the most out of CES. In progress, and out soon.